For the above model, we can choose any value of K between 6 and 14 as the optimal value, since accuracy is highest in this range; here we pick K = 8 and retrain the model as follows. These rules are easily interpretable, so these classifiers are generally used to generate descriptive models.
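The K-selection step above can be sketched with scikit-learn as follows. This is a minimal sketch, not the original model: the Iris dataset, the 70/30 split and the K range 1 to 20 are assumptions, so the range of best K values on this stand-in data may differ from the 6 to 14 reported above.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

# Iris is a stand-in dataset (assumption); the original data is not shown.
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42, stratify=y
)

# Test-set accuracy for each candidate K.
accuracies = {}
for k in range(1, 21):
    model = KNeighborsClassifier(n_neighbors=k)
    model.fit(X_train, y_train)
    accuracies[k] = model.score(X_test, y_test)

# All K values that share the highest accuracy form the "plateau".
best_score = max(accuracies.values())
plateau = [k for k, acc in accuracies.items() if acc == best_score]
print("K values with the highest accuracy:", plateau)

# Retrain with K = 8, the value chosen in the text.
classifier = KNeighborsClassifier(n_neighbors=8)
classifier.fit(X_train, y_train)
print("Accuracy with K = 8:", classifier.score(X_test, y_test))
```

Picking a K from the middle of a plateau, rather than its edge, is a common heuristic for making the choice less sensitive to the particular split.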

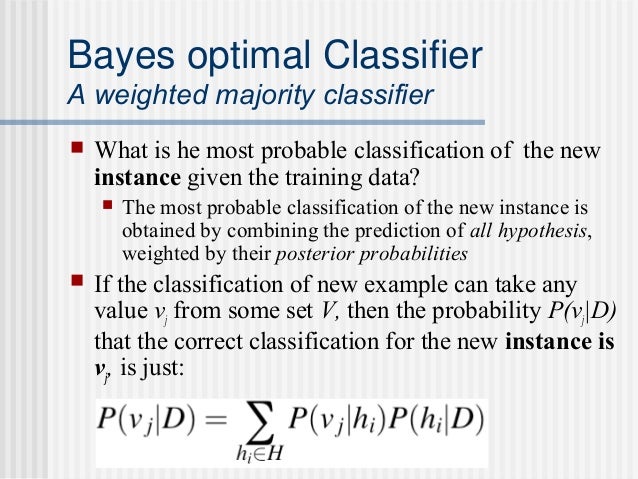

Suppose a pair $(X, Y)$ takes values in $\mathbb{R}^d \times \{1, 2, \dots, K\}$, where $Y$ is the class label of $X$. This means that the conditional distribution of $X$, given that the label $Y$ takes the value $r$, is given by $(X \mid Y = r) \sim P_r$ for $r = 1, 2, \dots, K$, where $\sim$ means "is distributed as" and where $P_r$ denotes a probability distribution. A classifier is a rule that assigns to an observation $X = x$ a guess or estimate of what the unobserved label $Y = r$ actually was.
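When the distributions $P_r$ and the priors $P(Y = r)$ are known, the Bayes classifier assigns $x$ to the label $r$ that maximises $P(Y = r)\,p_r(x)$, where $p_r$ is the density of $P_r$. The sketch below assumes three hypothetical one-dimensional Gaussian classes with made-up priors, means and standard deviations, purely for illustration.

```python
import math

# Hypothetical example: K = 3 classes, each with a known 1-D Gaussian
# class-conditional distribution P_r and a prior P(Y = r).
classes = {
    1: {"prior": 0.5, "mean": 0.0, "std": 1.0},
    2: {"prior": 0.3, "mean": 3.0, "std": 1.0},
    3: {"prior": 0.2, "mean": 6.0, "std": 2.0},
}

def gaussian_pdf(x, mean, std):
    """Density of N(mean, std^2) evaluated at x."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))

def bayes_classifier(x):
    """Return the label r maximising P(Y = r) * p_r(x), i.e. the posterior mode."""
    return max(
        classes,
        key=lambda r: classes[r]["prior"]
        * gaussian_pdf(x, classes[r]["mean"], classes[r]["std"]),
    )

for x in (-1.0, 2.5, 7.0):
    print(x, "->", bayes_classifier(x))
```

The normalising constant $P(X = x)$ is the same for every $r$, so it can be dropped from the argmax.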

Bayes optimal classifier. For reference on concepts repeated across the API, see the Glossary of Common Terms and API Elements. In the introduction to support vector machine classifier article, we learned about the key aspects as well as the mathematical foundation behind the SVM classifier. Perhaps the most widely used example is called the Naive Bayes algorithm.

The ROC curve for naive Bayes is generally lower than the other two ROC curves, which indicates worse in-sample performance than the other two classifier methods. The algorithm outputs an optimal hyperplane. He leads the STAIR (STanford Artificial Intelligence Robot) project, whose goal is to develop a home assistant robot that can perform tasks such as tidying up a room, loading/unloading a dishwasher, fetching and delivering items, and preparing meals using a.
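An in-sample ROC comparison of naive Bayes against two other classifiers can be sketched as below. This is a minimal sketch under assumptions: synthetic data from `make_classification` replaces the original dataset, and logistic regression and an RBF-kernel SVM are assumed to be "the other two" methods, so the ranking it prints need not match the one described above.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_auc_score, roc_curve
from sklearn.naive_bayes import GaussianNB
from sklearn.svm import SVC

# Synthetic binary data stands in for the original dataset (assumption).
X, y = make_classification(n_samples=300, n_features=10, random_state=0)

models = {
    "logistic regression": LogisticRegression(max_iter=1000),
    "SVM": SVC(kernel="rbf"),
    "naive Bayes": GaussianNB(),
}

def positive_scores(model, X):
    """Use decision_function when available, else the positive-class probability."""
    if hasattr(model, "decision_function"):
        return model.decision_function(X)
    return model.predict_proba(X)[:, 1]

curves, auc = {}, {}
for name, model in models.items():
    model.fit(X, y)
    # Scoring the training data gives in-sample ROC curves.
    scores = positive_scores(model, X)
    fpr, tpr, _ = roc_curve(y, scores)
    curves[name] = (fpr, tpr)
    auc[name] = roc_auc_score(y, scores)
    print(f"{name}: in-sample AUC = {auc[name]:.3f}")
```

ROC curves only need a ranking of the samples, which is why raw SVM decision values can be compared directly with naive Bayes probabilities.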

We can use probability to make predictions in machine learning. For instance, a well-calibrated binary classifier should classify the samples such that, among the samples to which it gave a `predict_proba` value close to 0.8, approximately 80% actually belong to the positive class. In this short notebook, we will reuse the Iris dataset example and instead implement a Gaussian Naive Bayes classifier using the pandas, NumPy, and scipy.stats libraries.
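The calibration property described above can be checked with scikit-learn's `calibration_curve`, which bins the predicted probabilities and reports the observed fraction of positives per bin. This is a sketch: the synthetic dataset, the 50/50 split and the choice of `GaussianNB` as the model under test are assumptions for illustration.

```python
from sklearn.calibration import calibration_curve
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB

# Synthetic binary data (assumption) split into train and test halves.
X, y = make_classification(n_samples=2000, n_features=20, n_informative=5, random_state=1)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.5, random_state=1)

model = GaussianNB().fit(X_train, y_train)
prob_pos = model.predict_proba(X_test)[:, 1]

# For each probability bin: observed positive rate vs. mean predicted probability.
# A well-calibrated model keeps these two numbers close in every bin.
frac_positive, mean_predicted = calibration_curve(y_test, prob_pos, n_bins=10)
for p, f in zip(mean_predicted, frac_positive):
    print(f"predicted ~{p:.2f} -> observed positive rate {f:.2f}")
```

Empty bins are dropped, so fewer than `n_bins` rows may be printed.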

In this blog, I will cover how you can implement a Multinomial Naive Bayes classifier for the 20 Newsgroups dataset. Please refer to the full user guide for further details, as the raw class and function specifications may not be enough to give full guidelines on their use. Maximum a posteriori (MAP) is a probabilistic framework that finds the most probable hypothesis for a training dataset.
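The Multinomial Naive Bayes workflow described above can be sketched with scikit-learn. To keep the example offline and self-contained, a tiny hypothetical corpus stands in for 20 Newsgroups; the real data can be loaded with `sklearn.datasets.fetch_20newsgroups`, which downloads it on first use.

```python
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.naive_bayes import MultinomialNB
from sklearn.pipeline import make_pipeline

# Tiny hypothetical corpus labelled with two 20 Newsgroups category names.
train_docs = [
    "the goalie made a great save in the hockey game",
    "the team scored in overtime to win the game",
    "the new graphics card renders 3d images quickly",
    "install the driver to fix the graphics rendering bug",
]
train_labels = ["rec.sport.hockey", "rec.sport.hockey", "comp.graphics", "comp.graphics"]

# Word counts feed a multinomial likelihood; alpha=1.0 is Laplace smoothing.
model = make_pipeline(CountVectorizer(), MultinomialNB(alpha=1.0))
model.fit(train_docs, train_labels)

new_docs = ["a late save won the hockey game", "the graphics driver crashed while rendering"]
predictions = model.predict(new_docs)
print(predictions)
```

On the full dataset the same pipeline applies unchanged; only the document and label lists come from `fetch_20newsgroups(subset="train")` and `subset="test"`.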

Results are then compared to the scikit-learn implementation as a sanity check. It is described using Bayes' theorem, which provides a principled way of calculating a conditional probability. The naive Bayes classifier is a version of this that assumes that the data is conditionally independent given the class and makes the.
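A from-scratch Gaussian naive Bayes classifier on Iris, checked against scikit-learn's `GaussianNB`, might look like this. It is a minimal sketch assuming NumPy and `scipy.stats.norm`; pandas is omitted for brevity. Each class gets a prior and a per-feature normal distribution, and the conditional-independence assumption turns the joint log-likelihood into a sum over features.

```python
import numpy as np
from scipy.stats import norm
from sklearn.datasets import load_iris
from sklearn.naive_bayes import GaussianNB

X, y = load_iris(return_X_y=True)
classes = np.unique(y)

# "Training": class priors plus per-class, per-feature means and standard deviations.
priors = np.array([np.mean(y == c) for c in classes])
means = np.array([X[y == c].mean(axis=0) for c in classes])
stds = np.array([X[y == c].std(axis=0) for c in classes])

def predict(X_new):
    """argmax_c of log P(c) + sum_j log N(x_j; mean_cj, std_cj)."""
    log_likelihood = np.stack(
        [norm.logpdf(X_new, loc=means[i], scale=stds[i]).sum(axis=1)
         for i in range(len(classes))],
        axis=1,
    )
    log_post = np.log(priors) + log_likelihood
    return classes[np.argmax(log_post, axis=1)]

ours = predict(X)
sk = GaussianNB().fit(X, y).predict(X)
print("agreement with scikit-learn:", np.mean(ours == sk))
print("training accuracy:", np.mean(ours == y))
```

Working in log space avoids underflow when many small densities are multiplied. scikit-learn adds a tiny variance-smoothing term, so agreement is expected to be near-perfect rather than guaranteed.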

Base classes and utility functions. SHAP (SHapley Additive exPlanations) by Lundberg and Lee (2016) [69] is a method to explain individual predictions. The 20 Newsgroups dataset comprises around 18,000 newsgroup posts on 20 topics, split into two subsets.

The Naive Bayes assumption implies that the words in an email are conditionally independent, given that you know whether the email is spam or not. One subset is for training (or development) and the other for testing (performance evaluation).
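The conditional-independence assumption for spam filtering can be sketched in plain Python. The four training emails are hypothetical, and add-one (Laplace) smoothing is an assumed design choice so that a word never seen with a class does not zero out the whole product.

```python
import math
from collections import Counter

# Hypothetical toy training emails.
emails = [
    ("win money now", "spam"),
    ("free money offer", "spam"),
    ("meeting agenda for monday", "ham"),
    ("lunch on monday", "ham"),
]

labels = {label for _, label in emails}
vocab = {w for text, _ in emails for w in text.split()}
doc_counts = Counter(label for _, label in emails)
word_counts = {label: Counter() for label in labels}
for text, label in emails:
    word_counts[label].update(text.split())

def log_posterior(text, label):
    """log P(label) + sum over words of log P(word | label), up to a constant."""
    total = sum(word_counts[label].values())
    score = math.log(doc_counts[label] / len(emails))
    for w in text.split():
        # Summing per-word terms is valid only under the naive independence assumption.
        score += math.log((word_counts[label][w] + 1) / (total + len(vocab)))
    return score

def classify(text):
    return max(labels, key=lambda label: log_posterior(text, label))

print(classify("free money"), classify("monday meeting"))
```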

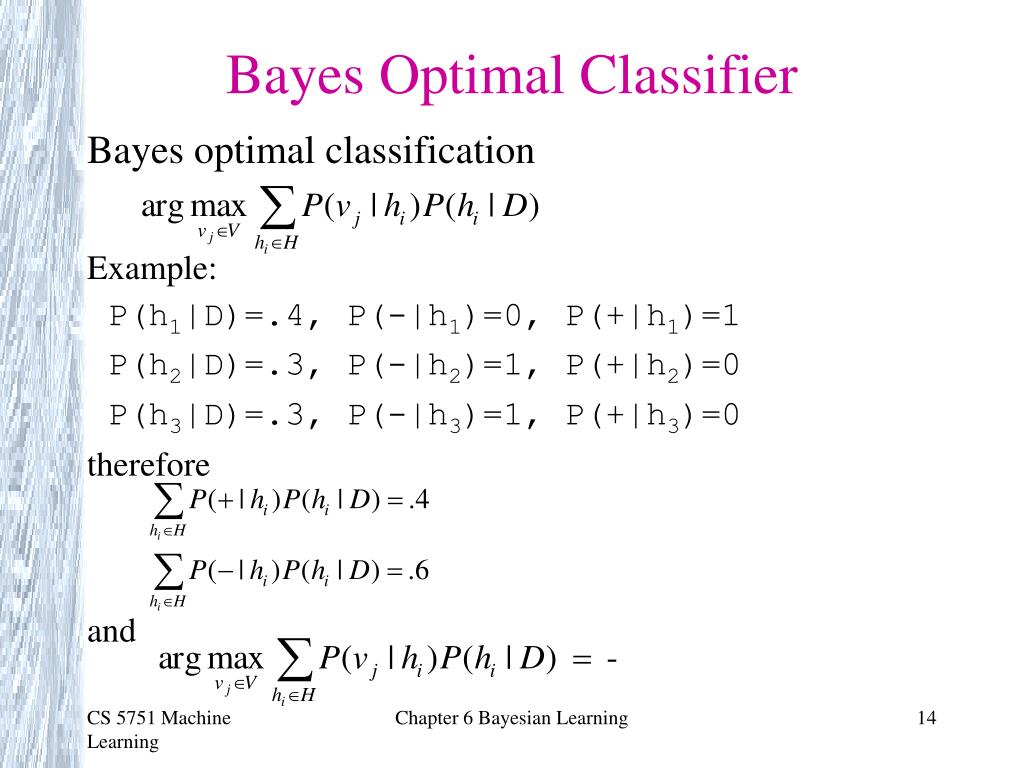

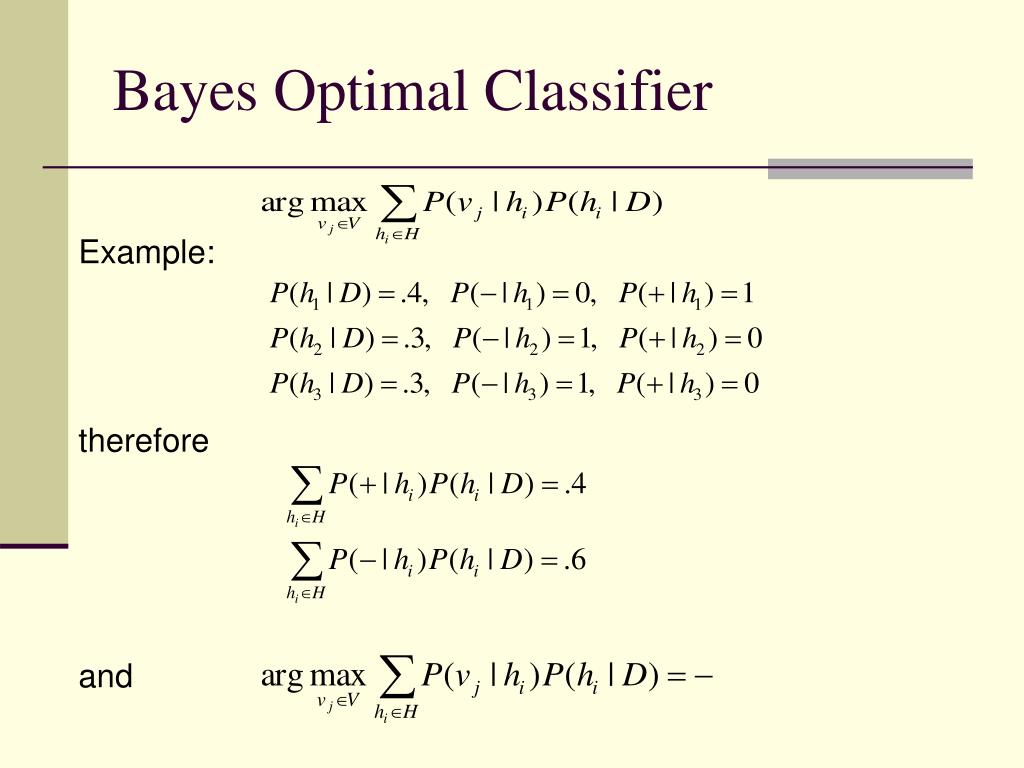

The Bayes optimal classifier is a classification technique. On average, no other ensemble can outperform it. Naive Bayes is a classification algorithm for binary and multi-class classification problems.

SHAP is based on the game-theoretically optimal Shapley values. Although SVM produces better ROC values for higher thresholds, logistic regression is usually better at distinguishing the bad radar returns from the good ones. The Naive Bayes classifier assumes that the presence of a feature in a class is unrelated to the presence of any other feature.
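To show what "game-theoretically optimal Shapley values" means, the sketch below computes exact Shapley values for a hypothetical three-player cooperative game by averaging each player's marginal contribution over all join orders. This is the underlying definition, not the `shap` library: SHAP treats features as players and approximates these values, because exact enumeration grows factorially with the number of features.

```python
from itertools import permutations
from math import factorial

# Hypothetical 3-player game: v maps each coalition to its payout.
players = ("A", "B", "C")
v = {
    frozenset(): 0,
    frozenset("A"): 10, frozenset("B"): 20, frozenset("C"): 30,
    frozenset("AB"): 40, frozenset("AC"): 50, frozenset("BC"): 60,
    frozenset("ABC"): 90,
}

def shapley_values(players, v):
    """Average each player's marginal contribution over all join orders."""
    phi = {p: 0.0 for p in players}
    for order in permutations(players):
        coalition = frozenset()
        for p in order:
            phi[p] += v[coalition | {p}] - v[coalition]
            coalition = coalition | {p}
    n_orders = factorial(len(players))
    return {p: total / n_orders for p, total in phi.items()}

phi = shapley_values(players, v)
print(phi)
```

The values sum to the payout of the full coalition minus the empty one (the efficiency property), which is what lets SHAP split a prediction exactly among the features.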

It is an ensemble of all the hypotheses in the hypothesis space. Assume (and this is almost never the case) that you knew $\mathrm{P}(y \mid \mathbf{x})$; then you would simply predict the most likely label. For example, a setting where the Naive Bayes classifier is often used is spam filtering.
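The ensemble view can be made concrete by contrasting it with picking the single most probable (MAP) hypothesis. The three hypotheses, their posteriors $P(h \mid D)$ and their predictions below are hypothetical. The Bayes optimal prediction is $\operatorname{argmax}_v \sum_h P(v \mid h)\,P(h \mid D)$, where $P(v \mid h)$ is 1 if $h$ predicts $v$ and 0 otherwise.

```python
# Hypothetical posteriors P(h | D) and each hypothesis's prediction for a new example.
posterior = {"h1": 0.4, "h2": 0.3, "h3": 0.3}
prediction = {"h1": "+", "h2": "-", "h3": "-"}

# MAP: use only the single most probable hypothesis.
h_map = max(posterior, key=posterior.get)
map_label = prediction[h_map]

# Bayes optimal: every hypothesis votes, weighted by its posterior probability.
votes = {}
for h, p in posterior.items():
    votes[prediction[h]] = votes.get(prediction[h], 0.0) + p
bayes_optimal_label = max(votes, key=votes.get)

print(map_label, bayes_optimal_label)
```

Here the MAP hypothesis says "+", but the posterior-weighted vote for "-" is 0.6 against 0.4, so the two rules disagree.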

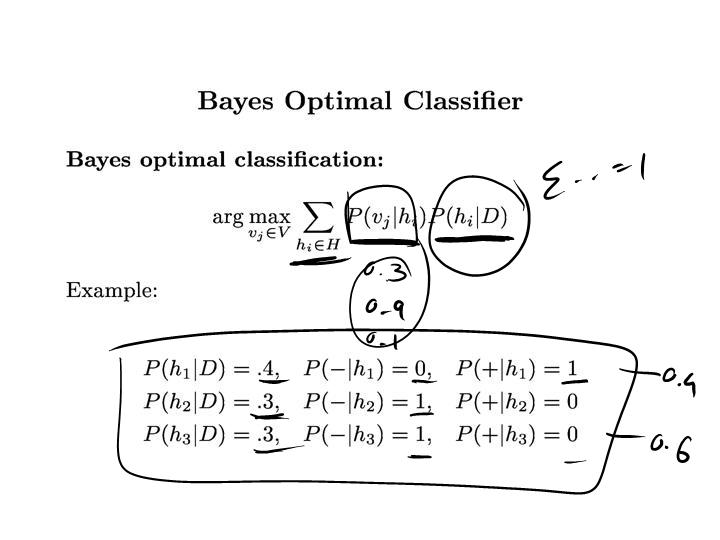

9.6 SHAP (SHapley Additive exPlanations). In this article, we are going to build a Support Vector Machine classifier using the R programming language. Brief digression: Bayes optimal classifier example.

An area of artificial intelligence with growing impact is machine learning [32], which develops algorithms that can learn patterns and decision rules from data. It is also closely related to the maximum a posteriori (MAP) framework. All we need to get started is to instantiate a `BayesianOptimization` object, specifying the function to be optimized, `f`, and its parameters with their corresponding bounds, `pbounds`. This is a constrained optimization technique, so you must specify the minimum and maximum values that can be probed for each parameter in order for it to work.

We shall compare the results with the Naive Bayes classifier. The condition used with if is called the. The hyperplane with maximum margin is called the optimal hyperplane.
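The maximum-margin idea can be checked numerically. This is a sketch assuming scikit-learn's `SVC` with a linear kernel and a very large `C` as an approximation to the hard-margin SVM. The four toy points are hypothetical and separable by the vertical line $x_1 = 1$, so the optimal hyperplane should have $w \approx (1, 0)$, $b \approx -1$ and margin $2 / \lVert w \rVert \approx 2$.

```python
import numpy as np
from sklearn.svm import SVC

# Hypothetical separable data: class 0 at x1 = 0, class 1 at x1 = 2.
X = np.array([[0, 0], [0, 1], [2, 0], [2, 1]], dtype=float)
y = np.array([0, 0, 1, 1])

# A very large C leaves almost no room for margin violations (near hard-margin).
clf = SVC(kernel="linear", C=1e6).fit(X, y)
w, b = clf.coef_[0], clf.intercept_[0]

# Width of the band between w.x + b = -1 and w.x + b = +1.
margin = 2 / np.linalg.norm(w)

print("w =", w, "b =", b, "margin =", margin)
print("support vectors:\n", clf.support_vectors_)
```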

Rule-based classifiers are just another type of classifier, which make the class decision using various if-else rules. The model is retrained with `classifier = KNeighborsClassifier(n_neighbors=8)` and `classifier.fit(X_train, y_train)`. Here the data is emails and the label is spam or not-spam.
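A rule-based classifier for the spam example can be as simple as an ordered list of IF-condition, THEN-class pairs. The rules and the default class below are hypothetical, chosen only to show the mechanism: the first rule whose condition holds decides the label.

```python
# Hypothetical hand-written rules, checked in order: (condition, class).
RULES = [
    (lambda words: "free" in words and "money" in words, "spam"),
    (lambda words: "winner" in words, "spam"),
    (lambda words: "meeting" in words, "not-spam"),
]
DEFAULT_CLASS = "not-spam"  # used when no rule fires

def rule_based_classify(email):
    """Return the class of the first rule whose condition matches the email."""
    words = set(email.lower().split())
    for condition, label in RULES:
        if condition(words):
            return label
    return DEFAULT_CLASS

print(rule_based_classify("Get FREE money now"))
```

Because each decision traces back to one readable rule, such models are easy to interpret, which is why they suit descriptive modelling.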

A Support Vector Machine (SVM) is a discriminative classifier formally. Based on prior knowledge of conditions that may be related to an event, Bayes' theorem describes the probability of the event.
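As a worked illustration of Bayes' theorem, here is a short calculation with hypothetical numbers: a condition with 1% prevalence, a test that detects it 90% of the time, and a 5% false-positive rate. The prior knowledge (prevalence) is combined with the test's behaviour to give the probability of the condition after a positive result.

```python
# Hypothetical inputs.
p_condition = 0.01               # prior P(condition)
p_pos_given_condition = 0.90     # P(positive | condition)
p_pos_given_no_condition = 0.05  # P(positive | no condition)

# Law of total probability for the evidence P(positive).
p_pos = (p_pos_given_condition * p_condition
         + p_pos_given_no_condition * (1 - p_condition))

# Bayes' theorem: P(condition | positive) = P(positive | condition) P(condition) / P(positive).
p_condition_given_pos = p_pos_given_condition * p_condition / p_pos
print(f"P(condition | positive) = {p_condition_given_pos:.3f}")
```

Despite the accurate test, the posterior is only about 0.154, because the low prior means most positives come from the large healthy group.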

This chapter is currently only available in this web version. Not only is it straightforward to understand, but it also achieves. Refinement loss can be defined as the expected optimal loss, as measured by the area under the optimal cost curve.

There are two reasons why SHAP got its own chapter and is not a subchapter of. The naive Bayes algorithm does not use the prior class probabilities during training. This is the class and function reference of scikit-learn.

In this tutorial, you are going to learn about the Naive Bayes algorithm, including how it works and how to implement it from scratch in Python without libraries. The accuracy obtained by the AdaBoost algorithm with a decision stump as the base classifier is 80.72%, which is higher than that of Support Vector Machine, Naive Bayes, and Decision Tree. The Bayes Optimal Classifier is a probabilistic model that makes the most probable prediction for a new example.
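A comparison of AdaBoost with decision stumps against SVM, naive Bayes and a decision tree can be sketched with scikit-learn. This is not the original experiment: the breast-cancer dataset, 5-fold cross-validation and default hyperparameters are assumptions, so it will not reproduce the 80.72% figure, and the ranking may differ on other data.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import AdaBoostClassifier
from sklearn.model_selection import cross_val_score
from sklearn.naive_bayes import GaussianNB
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

# Stand-in dataset bundled with scikit-learn (assumption).
X, y = load_breast_cancer(return_X_y=True)

models = {
    # AdaBoostClassifier's default base learner is a depth-1 tree, i.e. a decision stump.
    "AdaBoost (stumps)": AdaBoostClassifier(n_estimators=100, random_state=0),
    "SVM": SVC(),
    "Naive Bayes": GaussianNB(),
    "Decision tree": DecisionTreeClassifier(random_state=0),
}

# Mean 5-fold cross-validated accuracy for each model.
scores = {name: cross_val_score(m, X, y, cv=5).mean() for name, m in models.items()}
for name, s in scores.items():
    print(f"{name}: {s:.4f}")
```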

The software treats the predictors as independent given a class and, by default, fits them using normal distributions. Naïve Bayes text classification has long been used in industry and academia, building on the work of Thomas Bayes (1701-1761). Mdl is a trained ClassificationNaiveBayes classifier, and some of its properties appear in the Command Window.

Ng's research is in the areas of machine learning and artificial intelligence. We import the classifier model from the sklearn library and fit the model by initializing K = 4.

If data is linearly arranged, we can separate it with a straight line, but for non-linear data we cannot draw a single straight line. Support Vector Machine classifier implementation in R with the caret package. So we have achieved an accuracy of 0.32 here.
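The linear versus non-linear distinction can be illustrated on data that no straight line separates. This is a Python sketch with scikit-learn, not the R/caret workflow mentioned above; the concentric-circles dataset and its parameters are assumptions. A linear-kernel SVM is compared with an RBF-kernel SVM, which separates the classes with a curved boundary.

```python
from sklearn.datasets import make_circles
from sklearn.svm import SVC

# Concentric circles: the inner and outer rings cannot be split by a straight line.
X, y = make_circles(n_samples=200, noise=0.05, factor=0.5, random_state=0)

# Training accuracy of each kernel.
linear_acc = SVC(kernel="linear").fit(X, y).score(X, y)
rbf_acc = SVC(kernel="rbf").fit(X, y).score(X, y)
print(f"linear kernel: {linear_acc:.2f}, RBF kernel: {rbf_acc:.2f}")
```

The RBF kernel implicitly maps points into a space where a separating hyperplane exists, which appears as a circular boundary in the original two dimensions.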

The Bayes optimal classifier predicts $y = h_{\mathrm{opt}}(\mathbf{x}) = \operatorname{argmax}_y \mathrm{P}(y \mid \mathbf{x})$. Although the Bayes optimal classifier is as good as it gets, it can still make mistakes. The Naive Bayes Classifier (NBC) is a generative model that is widely used in Information Retrieval. However, this technique has been studied since the 1950s for text and document categorization.
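Why the optimal rule still errs can be shown with a small example, assuming a hypothetical setting with equal priors, $X \mid y=0 \sim \mathcal{N}(0, 1)$ and $X \mid y=1 \sim \mathcal{N}(2, 1)$. Here $\operatorname{argmax}_y \mathrm{P}(y \mid \mathbf{x})$ reduces to thresholding at the midpoint 1, and its error rate (the Bayes error) is $1 - \Phi(1) \approx 0.1587$, which a simulation confirms.

```python
import math
import random

# Optimal rule for the assumed setting: predict 1 exactly when P(1 | x) > P(0 | x),
# which with equal priors and variances means x > 1.
def h_opt(x):
    return 1 if x > 1.0 else 0

def std_normal_cdf(z):
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

# Analytic Bayes error: mass of each class falling on the wrong side of x = 1.
bayes_error = 1.0 - std_normal_cdf(1.0)

# Monte Carlo check of the same error rate.
rng = random.Random(0)
n = 100_000
errors = 0
for _ in range(n):
    y = rng.randint(0, 1)
    x = rng.gauss(2.0 * y, 1.0)
    errors += h_opt(x) != y
print(f"analytic: {bayes_error:.4f}, simulated: {errors / n:.4f}")
```

The error comes from the overlap of the two class densities, so no classifier can do better on this data.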

Ebook and print will follow.
